For the Human-Computer Interaction (CS341) elective I took, our team designed a pair of smart glasses that detect obstacles, along with a companion app that uses a smart voice assistant to guide visually impaired users during navigation. Following an iterative design process, we combined computer vision and voice assistance to help visually impaired people feel more independent when getting around. The project succeeded thanks to close collaboration with my talented teammate, Ewura Abena Asmah. I was responsible for user research and for visual and interaction design.

Visually impaired people face the serious challenge of not being able to perceive the world as sighted people do. They cannot see new technologies, the beauty of nature, art, or a person, nor can they see their surroundings well enough to move about freely.

Our objective was to design a pair of smart glasses that empowers visually impaired people to navigate freely. The design uses computer vision to detect obstacles and a smart voice assistant to offer guidance during navigation.

A pair of smart glasses (Third Eye) that detect obstacles and a companion app that uses a voice assistant to guide visually impaired users during navigation.

We used an iterative design process that included empathizing with users through field research, brainstorming ideas, prototyping designs, and testing the concepts we developed. We started with background research to understand the challenges visually impaired people face in the real world.

We read literature on the challenges blind people face in indoor and outdoor environments, and we analyzed design patterns from earlier work aimed at helping visually impaired users. We then conducted field research with proxy users: we blindfolded them and asked open-ended questions so they could experience, as closely as possible, what it is like to be visually impaired. Field research revealed that users felt like a burden because they constantly had to ask for assistance, even with tasks they considered "too easy to do." As a result, they were reluctant to ask for help. Users also reported difficulty navigating and said they could not perceive everything in their surroundings.

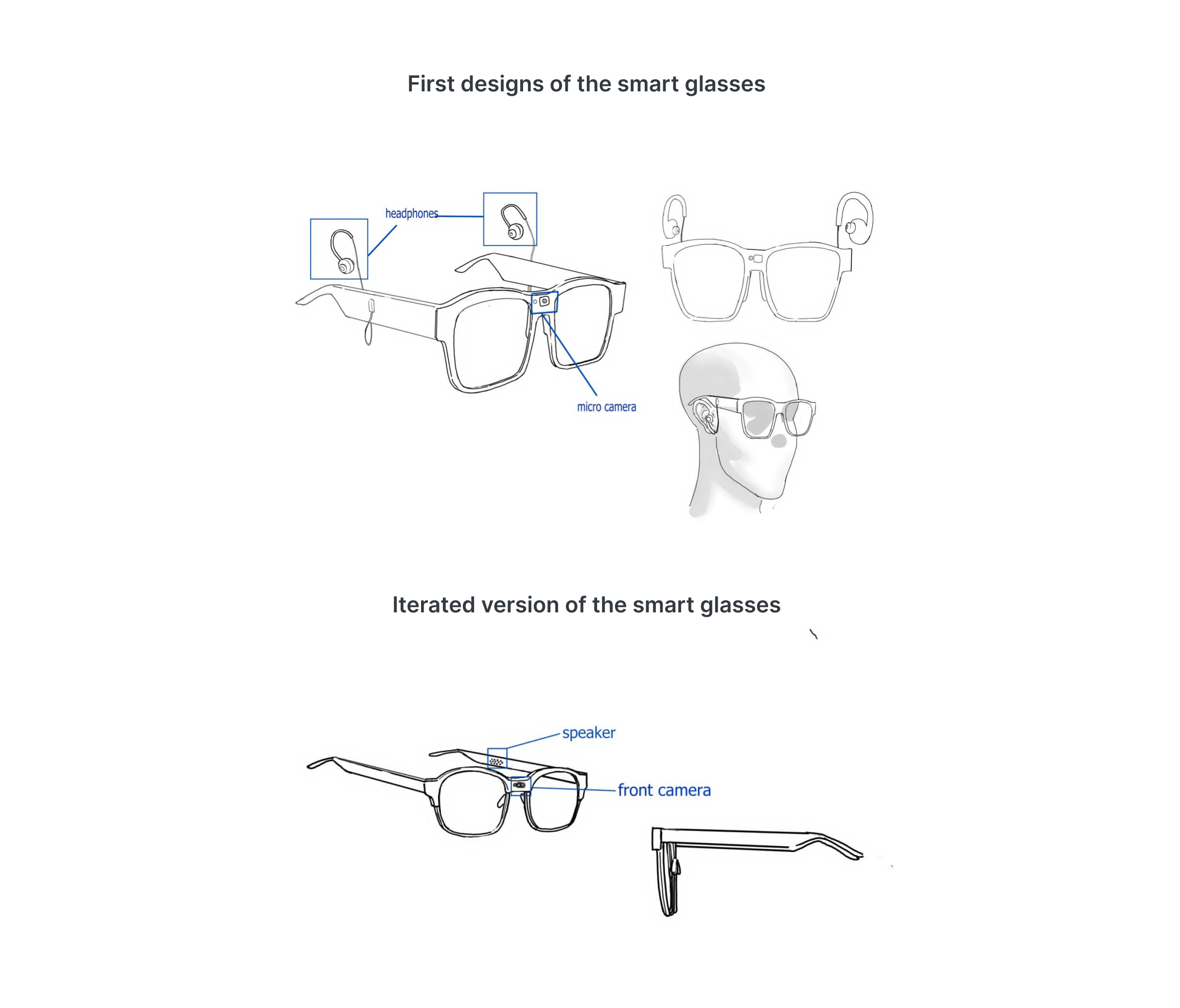

We developed three design concepts to address some of our users' pain points: a smart walking stick, a pair of assistive smart glasses, and a system that converts braille into speech. As the design process progressed, we realized that difficulty navigating was one of our users' most pressing challenges, so we chose to design a pair of assistive smart glasses and a companion app that uses a voice assistant to provide navigation directions. We sketched the glasses and tested the design with proxy users. These prototype evaluations showed that users disliked the earbuds on the glasses: they did not want to wear earbuds for long periods, and they did not want the earbuds to block out ambient sound. We therefore replaced the earbuds with speakers embedded in the frames in the next iteration of the glasses.

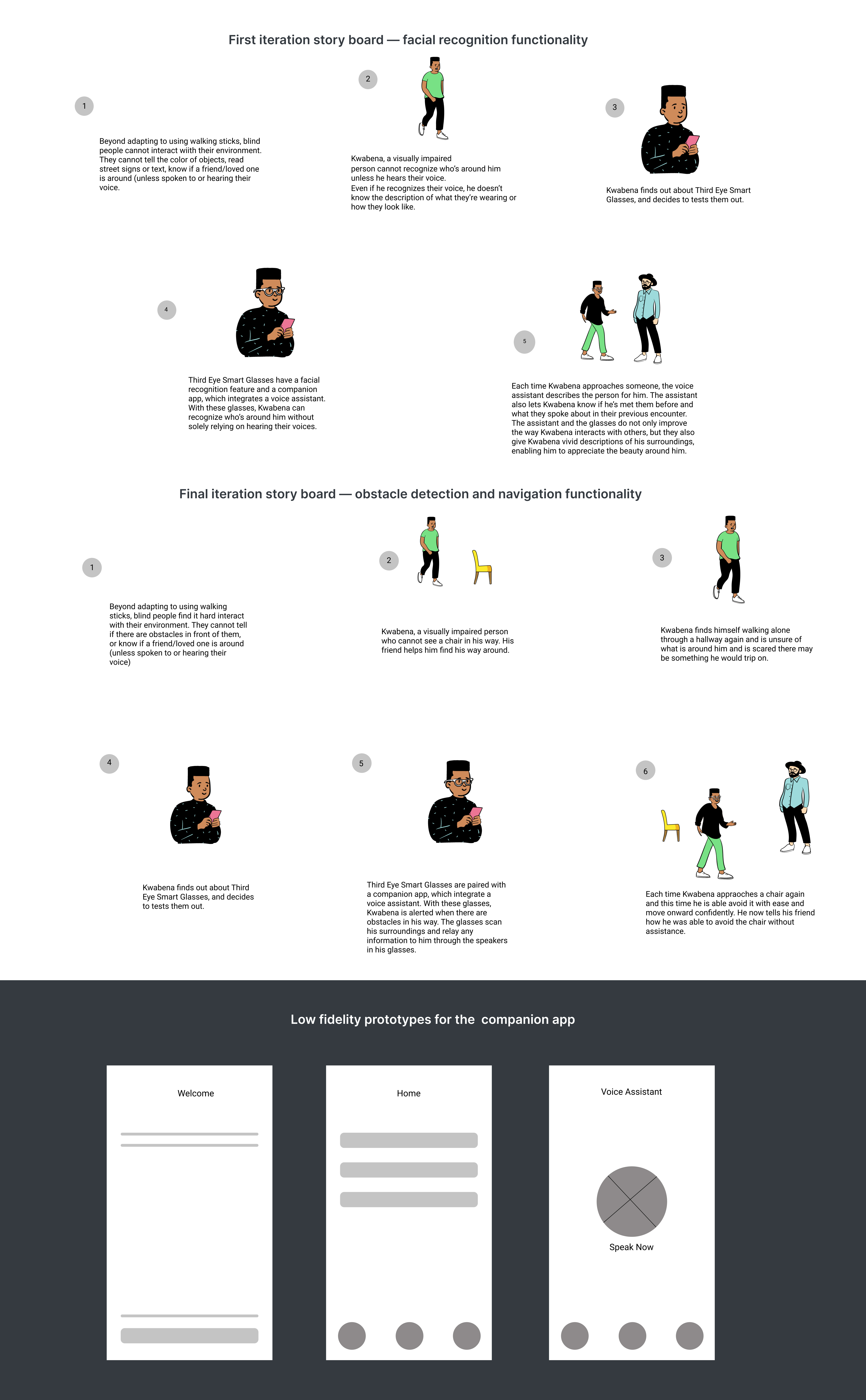

Our low-fidelity prototype was a storyboard that visualized how the smart glasses would work with the companion app. Earlier in the design process, we had learned that our visually impaired users wanted a way to recognize the people around them without relying solely on their voices. We therefore focused on designing a facial recognition feature for the Third Eye glasses to test whether it could help blind users recognize those around them. However, testing showed that this feature did not address users' main pain points, so we shifted our focus to navigation in the second and third iterations. We also prototyped the companion app's voice assistant, which users can give voice commands to during navigation.

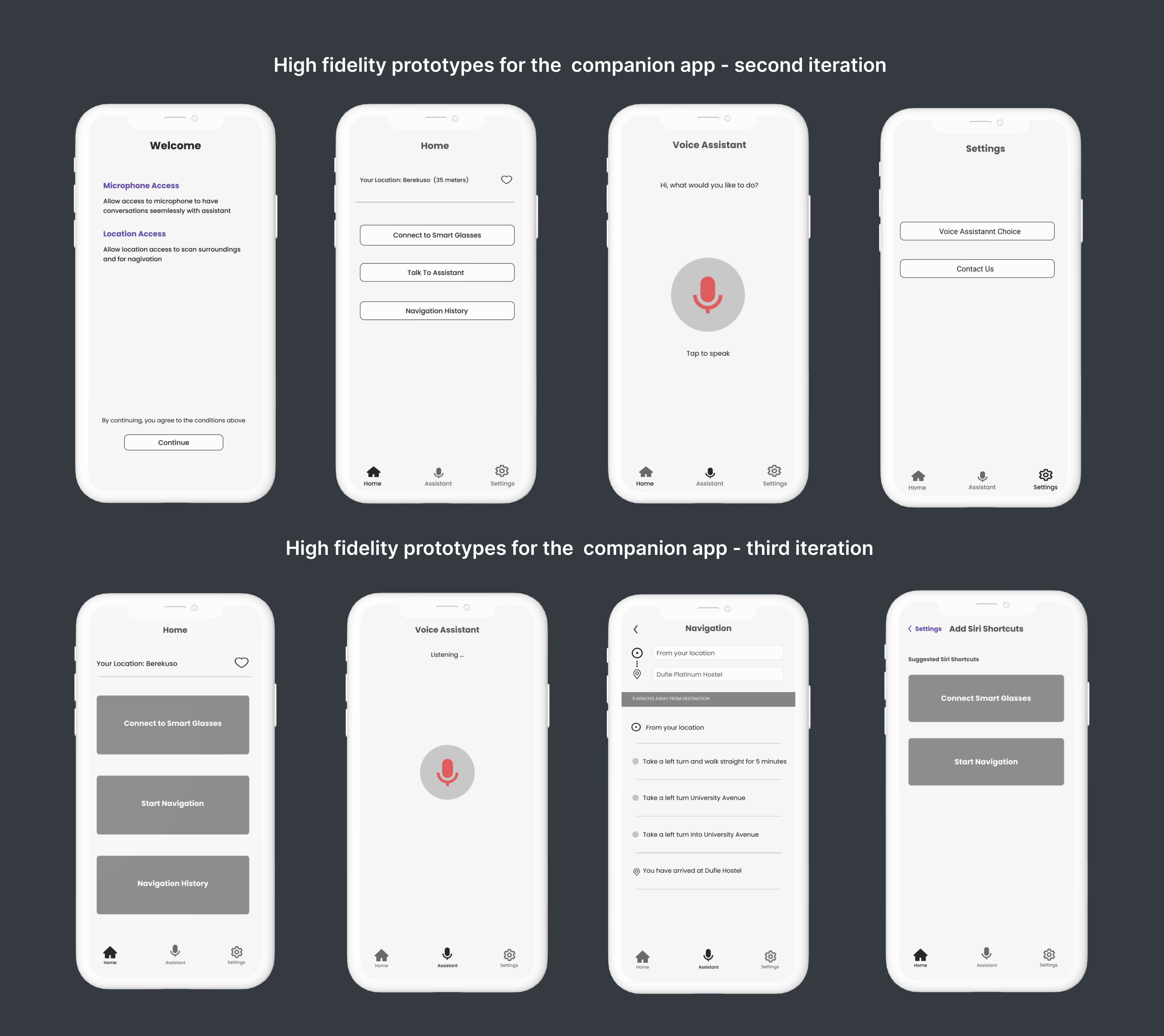

The final high-fidelity prototype for Third Eye is a video that demonstrates how users interact with the voice assistant in the companion app and how the glasses help visually impaired users move around. It also shows how the design notifies users when there are obstacles in their path. We developed the prototype over three iterations, each addressing issues that emerged in the previous version. For instance, testing earlier iterations with users revealed that the facial recognition feature did not address their main pain point, difficulty navigating, and that users were confused about how it was supposed to work. We therefore refocused the design on navigation. Further usability evaluation revealed that the companion app was text-heavy, so in the final iteration we made its interface voice-based, with fewer menu options, less text, and larger buttons. We also removed user onboarding because we were no longer considering a subscription model for the product.

Working on this project taught me not to jump straight to solutions but to follow the full design process to understand the problems users actually face. The iterative process helped us empathize with users, understand their problems more deeply, and address their real pain points. The project also showed us the value of validating design ideas through repeated testing with users.